
Update: It seems the webapp is no longer supported on Heroku. I’ll preserve the content of this post, but I’m removing the link to the now-defunct training webapp.

Post series:

(This post) Weird RL part 2: Training in the browser

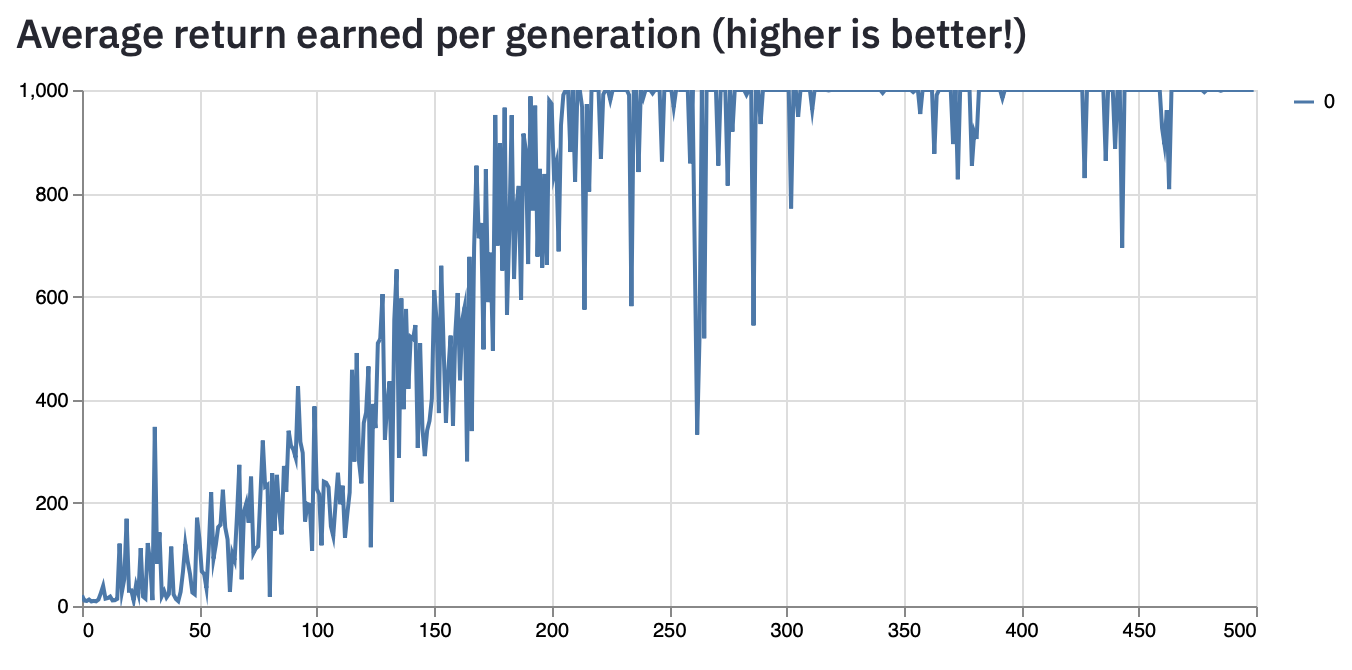

Last week, I wrote about my experiments with using the Optuna hyperparameter optimization library to search for tiny RL policies. I’m talking really tiny; these policies had between 5 and 96 parameters.

This week I wrote a Streamlit app that lets you search for tiny policies too, from the comfort of your browser! There is one caveat: the browser tool only supports the Pybullet inverted-pendulum-style environments. Normally, Gym environments need to create a virtual display to render, but Streamlit overrides their ability to do that. Pybullet, interestingly, lets you record a video of the agent without ever rendering it to your screen. So it plays rather nicely with Streamlit and still allows my app to show you a video of your policy after training finishes.

The other reason for restricting the environments to the inverted-pendulum-style ones is that I wanted to add some plots of the policy's behavior, and those plots depend on being able to directly interpret some elements of the observations.

I felt there was more value in providing a video and interesting figures than in just giving the option to train in yet another environment. But let me know if you disagree.

If you feel limited by the Streamlit app and want to run more experiments, my code is openly available on GitHub.

Interpreting Policy Behavior

Across the inverted pendulum environments, the first 5 elements of the observations your agent receives are, in order: the position of the cart on the track (x), the velocity of the cart on the track (x dot), the cosine of the angle of the pole on the cart (cos(θ)), the sine of that angle (sin(θ)), and the angular velocity of the pole (θ dot).
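To make that ordering concrete, here is a minimal sketch that gives names to the first five observation elements and recovers the pole angle from its cosine and sine. The names `PendulumObs`, `unpack_obs`, and `pole_angle` are my own for illustration and are not part of Pybullet or the app, and the example observation is made up.

```python
import math
from typing import NamedTuple, Sequence


class PendulumObs(NamedTuple):
    """First five observation elements, in the order described above."""
    x: float          # cart position on the track
    x_dot: float      # cart velocity on the track
    cos_theta: float  # cosine of the pole angle
    sin_theta: float  # sine of the pole angle
    theta_dot: float  # angular velocity of the pole


def unpack_obs(obs: Sequence[float]) -> PendulumObs:
    """Name the first five elements; any later elements are ignored."""
    return PendulumObs(*(float(v) for v in obs[:5]))


def pole_angle(o: PendulumObs) -> float:
    """Recover the pole angle in radians from its cosine and sine."""
    return math.atan2(o.sin_theta, o.cos_theta)


# Made-up observation for illustration (not taken from a real rollout).
example = unpack_obs([-0.6, 0.0, math.cos(0.1), math.sin(0.1), 0.0])
print(example.x, round(pole_angle(example), 3))
```

Using `atan2` on the sine and cosine avoids the ambiguity you would get from inverting either one alone.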

This knowledge lets us visualize the decisions a policy makes in response to these inputs.

When we plot position on the X axis and velocity on the Y axis, and color each grid square heatmap-style by the action the policy took there, we get the above plots. They can be pretty confusing to read and interpret, so I’m going to do my best to explain how I read them.

Let’s start with the top plot (X-axis is “Cart X position” and Y-axis is “Cart X velocity”). The grid square at (-0.6, 0.0) tells us that the cart was at X-position -0.6 on the track with a velocity of 0 (negative numbers basically mean “to the left”). This square is blue-green, and the color bar to the right of the plot shows that blue-green is associated with a value of 0.0. It’s important to remember that in the inverted pendulum environments, the policy takes actions by applying forces to the cart. Thus, an action of 0.0 means that the policy did not apply any force to the cart. In contrast, an action of -1.0 or 1.0 means that the policy applied a force of 1 unit to push the cart to the left or the right, respectively.

Putting all of this together, the square at (-0.6, 0.0) in the top plot tells us that when the cart was on the left-hand side of the track and was not moving, the policy chose not to apply any force to it.

Now, this behavior might sound kind of weird, and depending on the environment, it would be. But these plots were generated in the double inverted pendulum environment, where the goal is to balance the pole on top of the cart. With that in mind, it kind of makes sense that the policy would want to stay stable and not do anything when it isn’t moving and the pole is balanced on top of it.

The policy does seem to have a bias towards the left-hand side of the track: when it’s on the right side (X position 1.0) and the velocity is low (between -1 and 1), it seems to want to move to the left. This is an example of a weird behavior that the policy has picked up during training. Training for longer might fix it, but it likely just needs more parameters (this policy only has 9).
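If you want to build this kind of plot yourself, the underlying data is just the average action per (position, velocity) grid square. Below is a rough sketch of that binning step, not the app's actual plotting code. The function names, the grid step sizes, and the idea of logging `(cart_x, cart_x_velocity, action)` triples during a rollout are all assumptions on my part.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Cell = Tuple[float, float]


def snap(value: float, step: float) -> float:
    """Snap a value to the nearest multiple of `step` (the grid resolution)."""
    return round(round(value / step) * step, 10)


def mean_action_grid(
    samples: Iterable[Tuple[float, float, float]],
    x_step: float = 0.2,
    v_step: float = 0.5,
) -> Dict[Cell, float]:
    """Average the action taken in each (position, velocity) grid square.

    `samples` holds (cart_x, cart_x_velocity, action) triples logged while
    the policy runs. The default step sizes are illustrative guesses.
    """
    totals: Dict[Cell, float] = defaultdict(float)
    counts: Dict[Cell, int] = defaultdict(int)
    for x, v, action in samples:
        cell = (snap(x, x_step), snap(v, v_step))
        totals[cell] += action
        counts[cell] += 1
    return {cell: totals[cell] / counts[cell] for cell in totals}


# Made-up samples for illustration (not from a trained policy).
samples = [(-0.6, 0.0, 0.0), (-0.58, 0.1, 0.0), (1.0, 0.2, -1.0)]
for cell, action in sorted(mean_action_grid(samples).items()):
    print(cell, action)
```

Each key in the returned dictionary is one grid square and each value is the color you would look up on the color bar. Squares the policy never visited are simply absent, which is why a real plot can have blank regions.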

Hall of Fame

The idea behind the Hall of Fame is that people who train top performing policies in the Streamlit app can email me with the configuration of their run and with the final parameters of the policy. Then, I’ll verify that their policy is indeed a top performer and I’ll list them here. It’ll be up to them how much information about them I put down. If they want me to list their name, I will. Otherwise, I won’t.

Every time a policy is trained in the app, the score of the best policy is checked against the previous best result for that environment. If your policy beats the previous one, a message appears with my email address and the information to send me. You’ll need to copy it all down right away, because the next time you train a policy it will all be gone.
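The check itself is simple. Here is a hedged sketch of the logic using the scores from the current Hall of Fame in this post. The function name and message wording are hypothetical, and treating a tie as "not a new record" is my reading of "beats" rather than something the app is confirmed to do.

```python
from typing import Dict, Optional

# Best scores listed in the current Hall of Fame section of this post.
HALL_OF_FAME: Dict[str, float] = {
    "InvertedPendulumBulletEnv-v0": 1000.0,
    "InvertedDoublePendulumBulletEnv-v0": 9351.51,
    "InvertedPendulumSwingupBulletEnv-v0": 705.09,
}


def check_hall_of_fame(
    env_id: str,
    score: float,
    records: Dict[str, float] = HALL_OF_FAME,
) -> Optional[str]:
    """Return a message if `score` strictly beats the record, else None."""
    # An environment with no recorded score is beaten by any result.
    previous = records.get(env_id, float("-inf"))
    if score <= previous:
        return None
    return (
        f"New best on {env_id}: {score:.2f} points "
        f"(previous best: {previous:.2f}). "
        "Copy down your run configuration and final policy parameters now."
    )


print(check_hall_of_fame("InvertedPendulumSwingupBulletEnv-v0", 710.0))
```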

Current Hall of Fame

InvertedPendulumBulletEnv-v0; 1000.0 points

InvertedDoublePendulumBulletEnv-v0; 9351.51 points

InvertedPendulumSwingupBulletEnv-v0; 705.09 points


Thanks for taking the time to read this, and I hope you have fun playing with the Streamlit app. If you liked it, consider subscribing (it’s free!).

And share your thoughts with me in the comments. If you know people who would enjoy this article, please share it with them. ✌️