Hot Topics #4 (June 20, 2022)

Planning from pixels, real-time volumetric rendering, prostate cancer treatment personalization, and protein modelling.

Editor’s Note: Thanks to everyone who has been reading this newsletter during its first month of publication! If you have a paper coming out and want it highlighted here, email me at jfpettit@gmail.com.

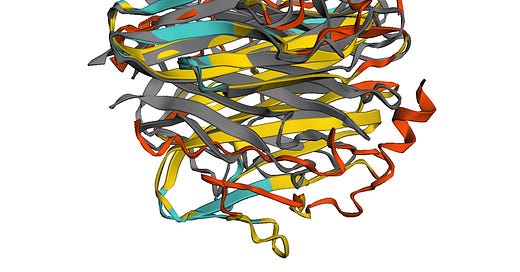

Diffusion probabilistic modeling of protein backbones in 3D for the motif-scaffolding problem; Trippe et al.; June 8, 2022

Abstract: Construction of a scaffold structure that supports a desired motif, conferring protein function, shows promise for the design of vaccines and enzymes. But a general solution to this motif-scaffolding problem remains open. Current machine-learning techniques for scaffold design are either limited to unrealistically small scaffolds (up to length 20) or struggle to produce multiple diverse scaffolds. We propose to learn a distribution over diverse and longer protein backbone structures via an E(3)-equivariant graph neural network. We develop SMCDiff to efficiently sample scaffolds from this distribution conditioned on a given motif; our algorithm is the first to theoretically guarantee conditional samples from a diffusion model in the large-compute limit. We evaluate our designed backbones by how well they align with AlphaFold2-predicted structures. We show that our method can (1) sample scaffolds up to 80 residues and (2) achieve structurally diverse scaffolds for a fixed motif.

AI-based structure prediction empowers integrative structural analysis of human nuclear pores; Mosalaganti et al.; June 10, 2022

Abstract: The eukaryotic nucleus protects the genome and is enclosed by the two membranes of the nuclear envelope. Nuclear pore complexes (NPCs) perforate the nuclear envelope to facilitate nucleocytoplasmic transport. With a molecular weight of ∼120 MDa, the human NPC is one of the largest protein complexes. Its ~1000 proteins are taken in multiple copies from a set of about 30 distinct nucleoporins (NUPs). They can be roughly categorized into two classes. Scaffold NUPs contain folded domains and form a cylindrical scaffold architecture around a central channel. Intrinsically disordered NUPs line the scaffold and extend into the central channel, where they interact with cargo complexes. The NPC architecture is highly dynamic. It responds to changes in nuclear envelope tension with conformational breathing that manifests in dilation and constriction movements. Elucidating the scaffold architecture, ultimately at atomic resolution, will be important for gaining a more precise understanding of NPC function and dynamics but imposes a substantial challenge for structural biologists.

Deep Hierarchical Planning from Pixels; Hafner et al.; June 8, 2022

Abstract: Intelligent agents need to select long sequences of actions to solve complex tasks. While humans easily break down tasks into subgoals and reach them through millions of muscle commands, current artificial intelligence is limited to tasks with horizons of a few hundred decisions, despite large compute budgets. Research on hierarchical reinforcement learning aims to overcome this limitation but has proven to be challenging, current methods rely on manually specified goal spaces or subtasks, and no general solution exists. We introduce Director, a practical method for learning hierarchical behaviors directly from pixels by planning inside the latent space of a learned world model. The high-level policy maximizes task and exploration rewards by selecting latent goals and the low-level policy learns to achieve the goals. Despite operating in latent space, the decisions are interpretable because the world model can decode goals into images for visualization. Director outperforms exploration methods on tasks with sparse rewards, including 3D maze traversal with a quadruped robot from an egocentric camera and proprioception, without access to the global position or top-down view that was used by prior work. Director also learns successful behaviors across a wide range of environments, including visual control, Atari games, and DMLab levels.

Prostate cancer therapy personalization via multi-modal deep learning on randomized phase III clinical trials; Esteva et al.; June 8, 2022

Abstract: Prostate cancer is the most frequent cancer in men and a leading cause of cancer death. Determining a patient’s optimal therapy is a challenge, where oncologists must select a therapy with the highest likelihood of success and the lowest likelihood of toxicity. International standards for prognostication rely on non-specific and semi-quantitative tools, commonly leading to over- and under-treatment. Tissue-based molecular biomarkers have attempted to address this, but most have limited validation in prospective randomized trials and expensive processing costs, posing substantial barriers to widespread adoption. There remains a significant need for accurate and scalable tools to support therapy personalization. Here we demonstrate prostate cancer therapy personalization by predicting long-term, clinically relevant outcomes using a multimodal deep learning architecture and train models using clinical data and digital histopathology from prostate biopsies. We train and validate models using five phase III randomized trials conducted across hundreds of clinical centers. Histopathological data was available for 5654 of 7764 randomized patients (71%) with a median follow-up of 11.4 years. Compared to the most common risk-stratification tool—risk groups developed by the National Cancer Center Network (NCCN)—our models have superior discriminatory performance across all endpoints, ranging from 9.2% to 14.6% relative improvement in a held-out validation set. This artificial intelligence-based tool improves prognostication over standard tools and allows oncologists to computationally predict the likeliest outcomes of specific patients to determine optimal treatment. Outfitted with digital scanners and internet access, any clinic could offer such capabilities, enabling global access to therapy personalization.

Robust deep learning based protein sequence design using ProteinMPNN; Dauparas et al.; June 4, 2022

Abstract: While deep learning has revolutionized protein structure prediction, almost all experimentally characterized de novo protein designs have been generated using physically based approaches such as Rosetta. Here we describe a deep learning based protein sequence design method, ProteinMPNN, with outstanding performance in both in silico and experimental tests. The amino acid sequence at different positions can be coupled between single or multiple chains, enabling application to a wide range of current protein design challenges. On native protein backbones, ProteinMPNN has a sequence recovery of 52.4%, compared to 32.9% for Rosetta. Incorporation of noise during training improves sequence recovery on protein structure models, and produces sequences which more robustly encode their structures as assessed using structure prediction algorithms. We demonstrate the broad utility and high accuracy of ProteinMPNN using X-ray crystallography, cryoEM and functional studies by rescuing previously failed designs, made using Rosetta or AlphaFold, of protein monomers, cyclic homo-oligomers, tetrahedral nanoparticles, and target binding proteins.
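
For readers new to the metric, here is a minimal sketch of how a sequence recovery number such as the 52.4% above is commonly computed. The abstract does not define the metric, so this assumes the usual reading: the fraction of positions where the designed residue matches the native one. The function name and the toy sequences are hypothetical.

```python
def sequence_recovery(native: str, designed: str) -> float:
    """Fraction of positions where the designed residue equals the native one.

    Assumes both sequences are already aligned position by position
    (same backbone, same length), as in fixed-backbone design.
    """
    if len(native) != len(designed):
        raise ValueError("sequences must have the same length")
    if not native:
        raise ValueError("sequences must be non-empty")
    matches = sum(a == b for a, b in zip(native, designed))
    return matches / len(native)


# Toy example: three of four residues agree.
print(sequence_recovery("ACDE", "ACDF"))
```

Benchmark figures like the ones quoted are typically averages of this per-protein value over a test set, though the exact protocol is in the paper rather than the abstract.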

Papers on machine learning for proteins; Kevin Yang; May 30, 2022

Background: We recently released a review of machine learning methods in protein engineering, but the field changes so fast and there are so many new papers that any static document will inevitably be missing important work. This format also allows us to broaden the scope beyond engineering-specific applications. We hope that this will be a useful resource for people interested in the field.

To the best of our knowledge, this is the first public, collaborative list of machine learning papers on protein applications. We try to classify papers based on a combination of their applications and model type. If you have suggestions for other papers or categories, please make a pull request or issue!

Adversarial Unlearning: Reducing Confidence Along Adversarial Directions; Setlur et al.; June 3, 2022

Abstract: Supervised learning methods trained with maximum likelihood objectives often overfit on training data. Most regularizers that prevent overfitting look to increase confidence on additional examples (e.g., data augmentation, adversarial training), or reduce it on training data (e.g., label smoothing). In this work we propose a complementary regularization strategy that reduces confidence on self-generated examples. The method, which we call RCAD (Reducing Confidence along Adversarial Directions), aims to reduce confidence on out-of-distribution examples lying along directions adversarially chosen to increase training loss. In contrast to adversarial training, RCAD does not try to robustify the model to output the original label, but rather regularizes it to have reduced confidence on points generated using much larger perturbations than in conventional adversarial training. RCAD can be easily integrated into training pipelines with a few lines of code. Despite its simplicity, we find on many classification benchmarks that RCAD can be added to existing techniques (e.g., label smoothing, MixUp training) to increase test accuracy by 1-3% in absolute value, with more significant gains in the low data regime. We also provide a theoretical analysis that helps to explain these benefits in simplified settings, showing that RCAD can provably help the model unlearn spurious features in the training data.
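
To make the "few lines of code" claim concrete, here is a rough sketch of an RCAD-style objective for a tiny linear softmax classifier, written in plain Python. It is not the authors' implementation. The abstract only says that confidence is reduced on points lying along directions chosen to increase training loss, so the single large gradient step, the use of entropy as the confidence measure, and the names `alpha` and `lam` are all assumptions made for illustration.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(W, x):
    """Class probabilities of a linear softmax classifier with weights W (K x D)."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in W])


def cross_entropy(p, y):
    return -math.log(p[y])


def entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


def adversarial_point(W, x, y, alpha):
    """Take one large step on x in the direction that increases the training loss."""
    p = predict(W, x)
    # d(CE)/d(logit_k) = p_k - 1[k == y]; chain rule through logits = W x.
    dlogits = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
    grad_x = [sum(dlogits[k] * W[k][d] for k in range(len(W)))
              for d in range(len(x))]
    return [xi + alpha * g for xi, g in zip(x, grad_x)]


def rcad_style_loss(W, x, y, alpha=1.0, lam=0.1):
    """Cross-entropy on the clean point minus an entropy bonus on the perturbed one."""
    x_adv = adversarial_point(W, x, y, alpha)
    return cross_entropy(predict(W, x), y) - lam * entropy(predict(W, x_adv))


W = [[1.0, 0.0], [0.0, 1.0]]  # toy weights, hypothetical
x, y = [2.0, 0.0], 0
print(rcad_style_loss(W, x, y))
```

Minimizing this loss fits the clean example while pushing predictions on the perturbed point toward higher entropy, that is, lower confidence. In a real pipeline the gradient would come from automatic differentiation rather than the hand-derived expression used here.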

Volumetric Bundle Adjustment for Online Photorealistic Scene Capture; Ronald Clark; June 2022

Abstract: Efficient photorealistic scene capture is a challenging task. Current dense SLAM systems can operate very efficiently, but images generated from the models captured by these systems are often not photorealistic. Recent approaches based on neural volume rendering can render novel views at high fidelity, but they often require a long time to train, making them impractical for applications that require real-time scene capture. We propose a method that can reconstruct photorealistic volumes in near real time.

ProteInfer: deep networks for protein functional inference; Sanderson et al.; October 6, 2021

Abstract: Predicting the function of a protein from its amino acid sequence is a long-standing challenge in bioinformatics. Traditional approaches use sequence alignment to compare a query sequence either to thousands of models of protein families or to large databases of individual protein sequences. Here we instead employ deep convolutional neural networks to directly predict a variety of protein functions – EC numbers and GO terms – directly from an unaligned amino acid sequence. This approach provides precise predictions which complement alignment-based methods, and the computational efficiency of a single neural network permits novel and lightweight software interfaces, which we demonstrate with an in-browser graphical interface for protein function prediction in which all computation is performed on the user’s personal computer with no data uploaded to remote servers. Moreover, these models place full-length amino acid sequences into a generalised functional space, facilitating downstream analysis and interpretation. To read the interactive version of this paper, please visit https://google-research.github.io/proteinfer/

ProteinMPNN; This model takes as input a protein structure and based on its backbone predicts new sequences that will fold into that backbone. Optionally, we can run AlphaFold2 on the predicted sequence to check whether the predicted sequences adopt the same backbone (WIP).

Generating molecular graphs by WGAN-GP; WGAN-GP with R-GCN for the generation of small molecular graphs 🔬

Lightning Apps; The Lightning App Gallery contains industry-leading Lightning Apps curated by our development team. Browse through a list of Lightning Apps developed by the community for the community. There’s a Lightning App for a huge variety of use cases, from research to enterprise and everything in between. The best part? Lightning Apps are open-source by default, and are designed to be repurposed, extended, and published back into the community. We’re stronger together, right?

LabML Dynamic Hyperparameters; Hyper-parameters control the learning process of models. They are set before training starts, either by intuition or a hyper-parameter search. They either stay static or change based on a pre-determined schedule. We are introducing dynamic hyper-parameters which can be manually adjusted during the training based on model training stats.
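
The general idea of a hyper-parameter that can be changed while training runs can be sketched with nothing but the standard library. The example below is not LabML's API. `FileBackedHyperParam`, the JSON override file, and the toy training loop are all hypothetical, and a file is used here only because it is the simplest channel for manual edits.

```python
import json


class FileBackedHyperParam:
    """A hyper-parameter whose value can be overridden by editing a JSON file.

    Hypothetical helper for illustration; this is not LabML's API.
    """

    def __init__(self, name, default, path):
        self.name = name
        self.value = default
        self.path = path

    def __call__(self):
        try:
            with open(self.path) as f:
                overrides = json.load(f)
        except (FileNotFoundError, json.JSONDecodeError):
            # Missing or half-written file: keep the last known value.
            return self.value
        if self.name in overrides:
            self.value = float(overrides[self.name])
        return self.value


def train(num_steps, learning_rate):
    """Toy gradient descent on (w - 3)^2 that re-reads the learning rate each step."""
    w = 0.0
    for _ in range(num_steps):
        lr = learning_rate()  # picks up any manual edit made since the last step
        w -= lr * 2.0 * (w - 3.0)
    return w


lr = FileBackedHyperParam("learning_rate", 0.1, "overrides.json")
print(train(50, lr))
```

Writing `{"learning_rate": 0.01}` to `overrides.json` while the loop is running would change the step size from the next iteration onward, without restarting training.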